Using Azure AI to Protect Payloads

- Repo Link: Link

Keeping our payloads out of cloud sandboxes has been an aim of red teamers for years. We use various combinations of checks, such as time of day, user agent, browser, source IP address and hiding behind a clicky-button. However, tightening up these guardrails can often result in missed shells or opportunities.

Wouldn’t it be nice if we could guarantee that it was a human who was viewing our link, and if it wasn’t a human, we could send them off to notmalicious.com instead?

We can even hide our HTML smuggling payload behind this mechanism to keep it away from Proofpoint and similar cloud proxies and sandboxes.

Welcome to Azure FaceAI

Microsoft have a variety of public AI products across GA and Preview. One of them that is freely available for our use is FaceAI. This is different from Computer Vision, which has much more extensive functionality and is designed for bounding boxes, counting people and other similar use cases. Microsoft have signed up to some Responsible AI principles which restrict certain FaceAI functionality to registered and vetted tenants. These features include direct facial recognition, such as ‘this user is 100% John Doe, because it matches the copy of John’s ID or verification photo that we have on file’.

If we could get access to this additional feature set, we could collate a series of photos of our target user from the internet or social media, store them in a storage account, and then send the target user an email protected behind facial verification. This would ensure that the phishing link is only seen by the exact user we want to target, rather than by any other human.

Comparing against specific users whose images you have stored also raises compliance and data protection issues, and doesn’t seem worth the additional steps for the vast majority of use cases (including this POC).

If we don’t have the time or willingness to undergo these checks, we can still progress using the free, unvetted FaceAI features by simply checking that a real face is present on the webcam. Plenty of ‘background check update’, DocuSign or similar identity-based pretexts will fit this technique.

Build It

First off, we need a Face resource, which is easily created in the Azure Portal via the search bar at the top. Select the Free SKU (take note of its limits) and note the endpoint URL and API keys from the Keys and Endpoint blade.

The usual safeguards apply here: ensure the FaceAI resource runs with least privilege and is adequately network-protected. By default the API is internet-accessible, but it can easily be restricted to Private Endpoints or particular subnets if your use case fits.

Python App

Within the repo is a simple Flask application that acts as a suitable POC for this technique. You can test this locally after populating your .env/appsettings.json file with the URL and API key.

```
cat appsettings.json
{
  "FACE_ENDPOINT": "https://your_url_here.cognitiveservices.azure.com",
  "FACE_APIKEY": "key_goes_here",
  "WEBSITES_PORT": "8000",
  "PUSHOVER_USER_KEY": "wibble",
  "PUSHOVER_API_TOKEN": "dibble"
}
```
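The app reads these values from the file when run locally and from environment variables once deployed (App Service exposes app settings to the app as environment variables). Below is a minimal sketch of that loading step. The function name `load_settings` and the precedence rule (environment variables override the file) are assumptions for illustration, not necessarily how the repo’s app.py does it.

```python
import json
import os
from pathlib import Path

# Key names taken from the sample appsettings.json.
REQUIRED_KEYS = (
    "FACE_ENDPOINT",
    "FACE_APIKEY",
    "WEBSITES_PORT",
    "PUSHOVER_USER_KEY",
    "PUSHOVER_API_TOKEN",
)


def load_settings(path: str = "appsettings.json") -> dict:
    """Hypothetical helper: read settings from a local JSON file (if present),
    then let environment variables override any file values."""
    settings = {}
    file_path = Path(path)
    if file_path.is_file():
        with file_path.open(encoding="utf-8") as fh:
            settings.update(json.load(fh))
    # Assumed precedence: env vars (e.g. App Service app settings) win.
    for key in REQUIRED_KEYS:
        if key in os.environ:
            settings[key] = os.environ[key]
    # Fail fast on startup rather than on the first request.
    missing = [key for key in REQUIRED_KEYS if not settings.get(key)]
    if missing:
        raise KeyError(f"Missing settings: {', '.join(missing)}")
    return settings
```

For example, `settings = load_settings()` followed by `int(settings["WEBSITES_PORT"])` gives the port to bind to. Failing fast here surfaces a mistyped key at startup instead of as a silent API error later.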

You can test and style the app locally by running python app.py from within the directory. It will listen on http://localhost:8000.

The Python file also includes a simple way to increase the frequency of the webcam snapshots (be conscious of API limits), as well as some simple demonstrations of how to style the page so it looks target-suitable.

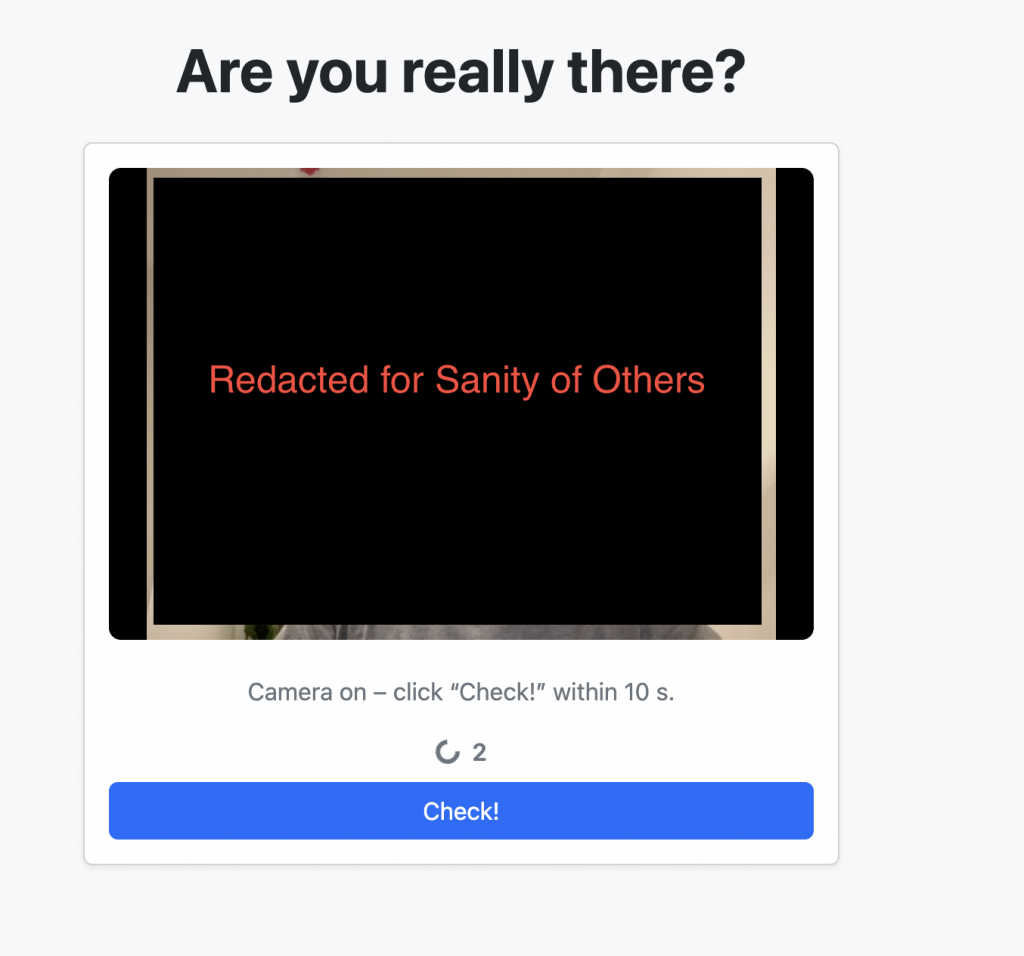

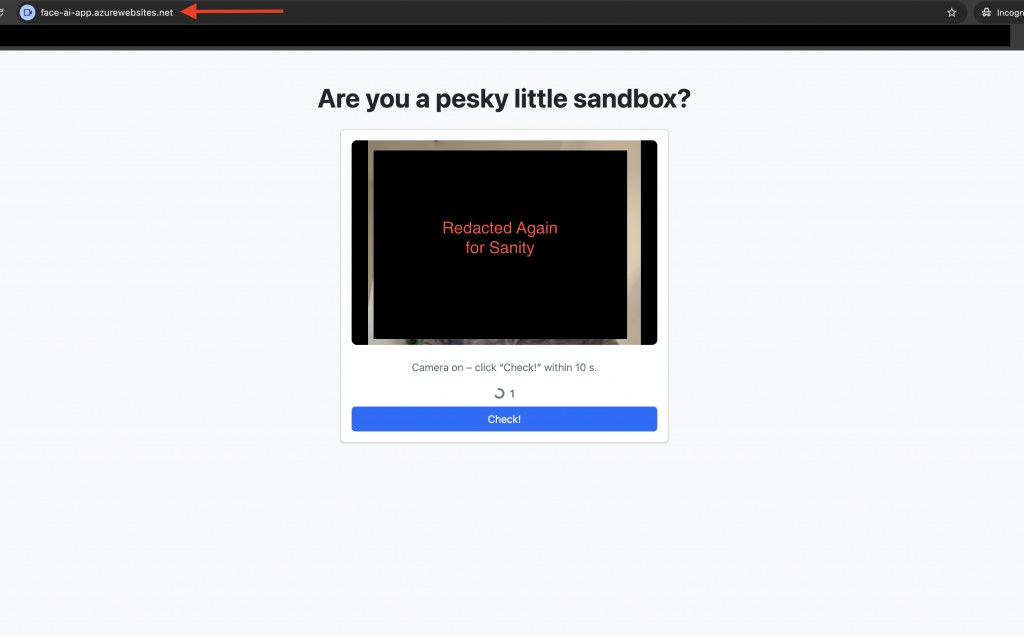

Open the web page and approve the webcam permission within the browser. The parts of the UI are self-explanatory.

Logic

After an attacker-configurable delay (10 seconds in this POC), the page redirects to https://google.com, as the app assumes the visitor is a sandbox or a user who has not followed through with clicking the Check! button.

If the user clicks Check! within 10 seconds, a webcam image is sent to the FaceAI API; if a face is detected, the user is redirected to https://foo.com, aka https://maliciouspayload.com. Both destinations are simply changed within the Python file.

Regardless of the outcome, you will get a Pushover notification with Pass or Fail (and suitably happy or sad Pushover sounds!).
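A Pushover notification is a single form-encoded POST to Pushover’s messages endpoint. The sketch below is an assumption of how such a call might look, not the repo’s code. The helper names, the title text and the two sound names are hypothetical choices. Only `token`, `user` and `message` are required fields.

```python
import urllib.parse
import urllib.request

PUSHOVER_URL = "https://api.pushover.net/1/messages.json"


def build_pushover_payload(token: str, user: str, passed: bool) -> dict:
    """Hypothetical helper: form fields for a Pass/Fail notification."""
    return {
        "token": token,  # PUSHOVER_API_TOKEN from appsettings.json
        "user": user,    # PUSHOVER_USER_KEY from appsettings.json
        "title": "Face check",
        "message": "Pass" if passed else "Fail",
        # Assumed sound names: swap for any from Pushover's sound list.
        "sound": "magic" if passed else "falling",
    }


def send_pushover(payload: dict, timeout: float = 10.0) -> int:
    """POST the payload as form data and return the HTTP status code.
    urlopen raises HTTPError on 4xx/5xx responses."""
    data = urllib.parse.urlencode(payload).encode("utf-8")
    req = urllib.request.Request(PUSHOVER_URL, data=data, method="POST")
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Keeping payload construction separate from the network call makes it easy to check the message contents without sending anything.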

Logging

The Python process’s stdout shows the incoming requests to the face-detection endpoint but not the FaceAI API responses, and those logs only persist for the lifetime of the Python process.

```
INFO: Started server process [15199]
INFO: Waiting for application startup.
INFO: Application startup complete.
INFO: 127.0.0.1:62999 - "POST /api/detect-face HTTP/1.1" 200 OK
```

For more real-world usage, we need to collect timestamps, user agents and source IP addresses. These are written to /home/detections.csv by default. This path is chosen because it persists across Azure Web App deployments and can be retrieved via FTP from the console.
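Once a few runs have accumulated, it can be handy to summarise the CSV rather than eyeball it. Below is a minimal sketch that makes three assumptions:

- the file uses the five columns from the repo’s sample header (timestamp, facedetected, clarity, sourceip, useragent);
- `facedetected` is written as the literal strings True/False;
- `clarity` is left uninterpreted.

It tolerates a missing header row, since the file read over SSH in this post has none. The helper names are hypothetical.

```python
import csv
from collections import Counter
from typing import Iterable

# Column order as in the sample detections.csv header.
FIELDS = ["timestamp", "facedetected", "clarity", "sourceip", "useragent"]


def read_detections(lines: Iterable[str]) -> list[dict]:
    """Parse detections.csv lines, with or without a header row.
    Quoted user agents containing commas are handled by the csv module."""
    rows = []
    # Explicit fieldnames mean a header row (if any) arrives as data.
    for row in csv.DictReader(lines, fieldnames=FIELDS):
        if row["timestamp"] == "timestamp":
            continue  # skip the header row when present
        row["facedetected"] = row["facedetected"] == "True"
        rows.append(row)
    return rows


def summarise(rows: list[dict]) -> dict:
    """Count passes/fails and distinct source IPs."""
    outcomes = Counter("pass" if r["facedetected"] else "fail" for r in rows)
    return {
        "pass": outcomes["pass"],
        "fail": outcomes["fail"],
        "unique_ips": len({r["sourceip"] for r in rows}),
    }
```

To use it, open /home/detections.csv with `newline=""` (as the csv module recommends), pass the file handle to `read_detections`, and hand the result to `summarise`.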

You can also retrieve the logs easily through the AZ CLI SSH functionality:

```
az webapp ssh \
  --name face-ai-app \
  --resource-group faceai

[30 seconds later.....]

(antenv) root@87c9e0421f2d:/tmp/8dd7f326f7d47dd# pwd
/tmp/8dd7f326f7d47dd
(antenv) root@87c9e0421f2d:/tmp/8dd7f326f7d47dd# cat detections.csv
2025-04-19T11:18:07.395972,False,0,172.16.0.1,"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36"
2025-04-19T11:18:16.154275,True,1,172.16.0.1,"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36"
```

Sample log output can be found here:

```
cat detections.csv
timestamp,facedetected,clarity,sourceip,useragent
2025-04-18T16:51:40.697518,True,1,127.0.0.1,"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36"
2025-04-18T16:51:50.226753,True,1,127.0.0.1,"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36"
2025-04-18T16:51:56.067282,False,0,127.0.0.1,"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/135.0.0.0 Safari/537.36"
```

Terraform

Clicking around in a Portal is a chronic timesink, so the repo for this post also contains some very simple Terraform code to deploy the required resources. You can then deploy your client-facing web app to Azure App Service in any of the usual ways: Portal, TF or AZ CLI.

Check the Terraform directory for sample code. However, the first Face resource you deploy must be created via the Portal so that you can agree to the T&Cs, as shown here.

Deploy to Azure Easily without Terraform

You can obviously host this on any cloud provider’s serverless equivalent and simply call the Azure FaceAI API, but we are using Azure in this example. There are plenty of ways to get an app running in Azure; this is just one, and it reliably ends up with the files in the right place in the container.

Make sure you use the B1 SKU, as that is the minimum tier that supports Always On, which prevents your app being powered down for inactivity.

```
zip -r app.zip app.py requirements.txt static/ -x "*.venv*" "*.git*" "__pycache__/*" "*.DS_Store" "Terraform/*" ".azure"

az webapp up \
  --name face-ai-app \
  --resource-group faceai \
  --location ukwest \
  --runtime "PYTHON|3.11" \
  --os-type Linux \
  --sku 'B1'

az webapp config appsettings set \
  --name face-ai-app \
  --resource-group faceai \
  --settings @appsettings.json

az webapp config appsettings set \
  --resource-group faceai \
  --name face-ai-app \
  --settings SCM_DO_BUILD_DURING_DEPLOYMENT=true # Tell Az to install reqs.

az webapp config set \
  --resource-group faceai \
  --name face-ai-app \
  --startup-file "python app.py"

az webapp config set \
  --resource-group faceai \
  --name face-ai-app \
  --always-on true # This stops Az powering down your container for idle time and you missing a shell!

az webapp deploy \
  --resource-group faceai \
  --name face-ai-app \
  --src-path app.zip \
  --type zip

az webapp restart \
  --name face-ai-app \
  --resource-group faceai
```

This should give you the output below:

You can check that the environment variables were applied correctly in the Environment Variables section of the App Service in the Portal.

And that’s it. I think this could be fairly difficult to defend against in the short term, as it is a new problem for sandboxes to contend with. Provided the initial domain is considered safe, the redirection or file download can be hidden from inspecting proxies.